Since I publish this blog post,

a lot of folks contacted me for more information, source code and

overall encouragement to improve on the project. So I started to work on

a better prototype and will document my findings as I go along.

(this is work in progress, check back regularly for newest developments)

What is MicLoc anyway?

MicLoc

is an effort to develop a device capable of

passively identifying a sound based event position on a given map,

therefor pinpointing its location. The whole idea is to

achieve this goal with

everyday electronics and reduced development costs.

With the event of small, affordable, powerful

microprocessors and electronics in general, this technology now seems

accessible to potential commercial applications and general public

use.

The main goals of this project are:

- Develop a low cost, compact device capable of identifying a

source source location on a map with sub-meter precision.

- Develop, detail and open-source the hardware and plans used

so anyone can build this device.

- Develop, detail and open-source the software needed to

interface the device with a computer.

How it works

To understand how a

source source can be located, we must first understand sound itself.

“Sound is a vibration that propagates as a

mechanical wave of pressure and displacement, through some medium

(such as air or water). Sometimes sound refers to only those

vibrations with frequencies that are within the range of hearing for

humans or for a particular animal.”

As with any wave, sound travels at a given

velocity. If we can measure the time, in different points in space,

at which the sound wave arrives we can deduct the origin of where

that sound was first generated.

Lets assume sound travels at 340 meters per second

(the actual speed of sound depend on several factors). When a

sound is generated, it propagates in a spherical motion and after one

second, it arrives at the border of an imaginary sphere with 340

meters of radius. For simplicity, lets think about 2D maps.

In this example we illustrate the previous

proposed scenario. If we have one sensor and the originating time

when the source was generated (T0) we know that the sound has to have

an origin at the edge of the green circle with radius 340x(T-T0) with

the center at our sensor.

With one sensor we only know what distance the

sound source is from the sensor. When we add multiple sensors we can

figure out exactly where the sound source is, it will be where all

circles intercept with each other.

The only intercepting point common to all sensors

is 3.

This is called acoustic location via

trilateration.

There are other methods for locating sound

sources, which involve other properties of a sound wave, sensors or

the math used to infer the emitters position.

This is one way to explain how one can

locate a signal with several sensors.

The method proposed in this project is slightly different.

The reason is simple: we don't know when

the signal was generated in order to start measuring time. We just

know a signal was originated when it first arrives at a sensor. This

is T0 for us.

So we have to work

with the time differences between each sensor receiving the signal, which is usually called multilateration. The setup will be essentially a mini sound ranging device.

Considerations, limitations and challenges

There are several aspects to be considered when developing this

project.

a) The device has to know its location and its sensors locations.

This means either a manual configuration (fewer costs) or

gps-awareness (greater costs) or both.

b) The device must be able to have a very high sampling rate of

its sensors.

c) The device must be able to communicate with the outside,

ideally via wireless.

Wifi, Xbee, Bluetooth or others can be considered here.

d) The device should be able to have a decent battery autonomy.

(need to define decent)

e) Sensors location and configuration are of major importance and

have to be carefully considered.

f) The number of sensors affect the precision. The more sensors,

more precision. But usually we cannot sample all sensors at the same time, so

the more sensors, less sample rate so less precision. An optimal

configuration must be studied for the device.

Phase 1

Given

all factors here, I figured that there were just too many variables to

just blindly decide on a configuration for the device. How many mics

should it have? What's the minimum sample rate acceptable? How far apart

should the mics be?

In

order to get a more precise idea and answers for these questions I

developed a small simulator where it is possible to test several of the

previous factors.

In

the simulator one can visually see what happens when the mic

configuration changes, the sample rate changes or the sound speed

changes. The red dots represent the mic locations, the purple dot the

sound event position and the blue pixels is where the DTOA (diference of

time of arrival) starts to get close to the real solution. The bluer it

is, the closer it is to the solution.

The

white pixels near the real location are marked as 'possible' solutions.

This basically means that for the sample rate used, there is not enough

precision to measure accurately and differentiate between the real

solution and a proposed one.

This represents the very best case scenario. Real world introduces another set of problems like sound sample quality, measurement errors and so forth, but the simulator aims to provide a basis for the project. In particular, figuring out the minimum sample rate which should be used as well as the distance between mics and their configuration.

You can get the eclipse source code and runnable jar at GitHub. As always, suggestions/corrections/comments are most welcome.

As expected, sample rate plays a major role in the project, as well as the distance between mics. The higher the sample rate, the higher the precision is. Same for mic distance. The relationship is not linear either, as one might expect.

Using the simulator, we can test a setup with 4 mic, placed in a square of 200x200 millimetres. By varying the sample rate we get an estimate of possible locations per benchmark run (lower is better). Doing another analysis and maintaining the sample rate at 300kHz, it also possible to test how increasing the distance between mics affects the precision.

When the sample rate doubles, the precision seems to roughly quadruple.

The distance between mics has even more impressive impact on performance.

This is great news if you want to build a big device, by increasing the distance between the sensors, you can greatly increase precision. Unfortunately for me, at least for now, I want to build a compact device, so I must juggle with the sample rate and number of mics.

Phase 2

Teensy is a great

platform. The new version 3.1 features some interesting

characteristics that got my attention. One of them is that it has 2

internal ADC converters. This means taking 2 samples at the same

time. Major bonus! It's fast (Cortex-M4 CPU can run at 98Mhz), almost

100% arduino compatible, inexpensive, full of a wide range of

features and has a pretty active forum. I seriously recommend

checking all its features, it's really nice! I think of Teensy a bit

like “arduino on steroids”.

Teensy is a great

platform. The new version 3.1 features some interesting

characteristics that got my attention. One of them is that it has 2

internal ADC converters. This means taking 2 samples at the same

time. Major bonus! It's fast (Cortex-M4 CPU can run at 98Mhz), almost

100% arduino compatible, inexpensive, full of a wide range of

features and has a pretty active forum. I seriously recommend

checking all its features, it's really nice! I think of Teensy a bit

like “arduino on steroids”.

The buffer is 2048 bytes sampled at ~454kHz, the grey line shows when the threshold was crossed. Given the Teensy sampling at around 454kHz, the greatest diference between mics srqt(25*25+25*25)=35.355cm, minimum sound speed lets say 320 m/s, the maximum distance in samples from the start of the sound in one mic to the most distant one is given by 353.55/320*454 ~= 500 samples. So my choice was around 4 times that, or 2048 bytes.

General formula for the minimum samples is Dm/SS*SR, where Dm is the greatest distance between mics in millimetres, SS is the speed of sound in m/s (which is the same as mm/ms) and SR is the sample rate in kHz (which is the same for samples/ms).

This is something to consider when recording samples, the bigger the distance between mics the more memory you need in your hardware platform. Same for sampling rate.

Aligning the samples

In order to locate the origin of that sound, we first need to calculate the TDOAs (Time Difference of Arrival) between each signal. In an ideal world, that would be trivial. Just slide the samples of each mic, subtract one from the other until all points are 0. Even better, if there was no noise, just find the first sample with sound <> 0.

Correctly calculate TDOAs is, of course, of paramount importance for the precision of the device.

If you look at the image above, you can see the sound waves are similiar, but not equal in format or amplitude. And this is a sample with low noise. I tried several approaches to TDOA calculation, and the one that yielded the best results so far is using Cross-Correlation with time-delay analysis.

The distances between each vertical line gives us the TDOA. We can just assume the first line (in RED) to be T=0 and calculate from there the time differences to the others.

If you notice, the green line does not look good at all. The problem is that the samples where not filtered for noise nor normalized. For that I programmed a filter that normalizes the samples, reduces the noise and weights the sample values in relation to the distance of the probable start of sound. The output will then look like:

The sound waves now seem properly aligned.

As expected, sample rate plays a major role in the project, as well as the distance between mics. The higher the sample rate, the higher the precision is. Same for mic distance. The relationship is not linear either, as one might expect.

Using the simulator, we can test a setup with 4 mic, placed in a square of 200x200 millimetres. By varying the sample rate we get an estimate of possible locations per benchmark run (lower is better). Doing another analysis and maintaining the sample rate at 300kHz, it also possible to test how increasing the distance between mics affects the precision.

When the sample rate doubles, the precision seems to roughly quadruple.

The distance between mics has even more impressive impact on performance.

This is great news if you want to build a big device, by increasing the distance between the sensors, you can greatly increase precision. Unfortunately for me, at least for now, I want to build a compact device, so I must juggle with the sample rate and number of mics.

Phase 2

The first prototype

was built on top of the Arduino Nano. This Arduino version is compact

and generally something I like to use, but it doesn't seem ideal for

the new version of MicLoc. One of the reasons has to do with the

maximum sample rate I could get out of it. After messing around with

the prescaler factor I was able to get around 77kHz out of the ADC.

There is a report that someone achieved 360kHz.

Still, after using

the simulator it became clear that is it really not enough for a

compact device.

So I search around

for an hardware platform that was compatible with the proposed goals

and found Teensy 3.1.

Teensy 3.1

It seems like a good

platform for developing MicLocV2, especially since I'm already

familiar with programming arduinos. Of course, I will continue to

search for other platforms for this project, I'm almost sure there

are better ones that I don't know (suggestions are welcome).

I order a couple to

start experimenting and figuring out how can I squeeze every kHz out

of the ADCs.

MicLoc V2 -

Prototype 1

My aim here is to

understand exactly the Teensy 3.1 limitations on this project. The

focus will be almost exclusively on sampling rates. That's because I

consider the other goals, like comms (via WiFi, BT,...), position

awareness (GPS,...), etc, details that can be 'easily' solved, given

enough time and code.

The ability to

properly sample fast enough can be a real limitation for using this

platform tough.

Hence, I'll start

here.

Sampling rate on

Teensy 3.1

To find out how fast

can I sample on Teensy, I developed the following simple test:

...

void loop() {

startTime = millis();

void loop() {

startTime = millis();

for(i=0;i

val = analogRead(AIN);

}

stopTime = millis();

val = analogRead(AIN);

}

stopTime = millis();

totalTime =

stopTime-startTime;

samplesPerSec = samples*1000/totalTime;

...

samplesPerSec = samples*1000/totalTime;

...

This yields an

impressive result of 112599 samples/sec, or around 112kHz. Looks

promising, even without any code modification or specific resolution

setup, just using the analogRead function it is already much faster

than arduino.

Despite Teensy

having 16bit resolution (13 usable), I'm happy with 8bit resolution

for the MicLoc. After searching around in forums, reading the specs,

overall dwelling into the code I hacked away a solution for having a

much higher sample rate.

I used the excellent

ADC library from Pedvide, even if I ended up writing my own analog

reading functions and macros. If you have a Teensy 3.1 (only works on

3.1) and want to give it a spin, you can find the ADC benchmark code

at GitHub.

In short, I ended up

with a promising 1230012 samples/sec, that's an impressive 1230kHz

sampling rate. And the great thing is that it's possible to sample 2

channels at once with negligible loss of sampling rate. Of course, I

can't assume the precision will be great, but it seems to me that

this is not a big issue for the scope of the project.

Moving towards a

prototype

Given that I need at

least 3 mic sources to make this project work and I can sample with 2

ADCs at the same time, it makes sense that the V2 prototype has (at

least) 4 mics. There's nothing to lose since sampling 3 or 4 analog

ports take the same amount of time.

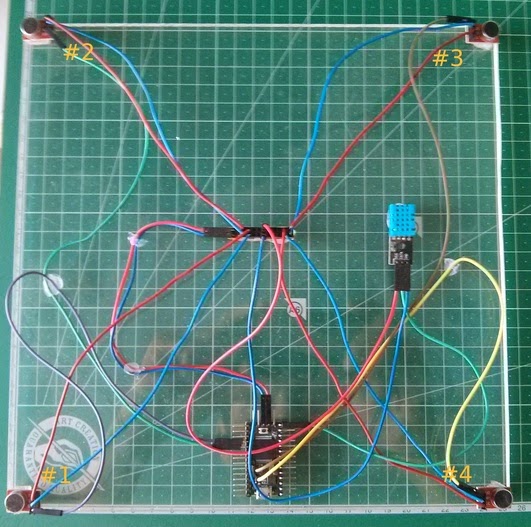

This is the simplest design I came with for a first approach:

| |

| Prototype and mics numbered. (bonus: my reflected self...) |

Or on a more

readable fashion:

|

| Schematic using Fritzing |

Besides the 4

microphones there is also a temperature/humidity sensor to calculate

the speed of sound. The base is a square of 25cmX25cm, and the mics

are 24cm apart.

The code is not

very complicated. The idea is to sample and record the mics output to

a buffer and, if the sound is above a certain threshold, pipe the

data to the serial port for analysis. It also supports commands to

check the current sample rate and make ajustments to the threshold

level and read the current mic buffers.

You will need the

Arduino IDE with the Teensyduino add-on, the Pedvide ADC library and the DTH11 library for the temperature sensor (or use your own) to compile and run the code in the Teensy3.1 (find it here: GitHub).

To get a feeling on

what's going on on the mics, I made a Java program that connects to

the serial interface, receives the sound events and plots them to a

PNG file. Below is the output of the 4 mics.

|

| T: 4511 Samples: 2048 Samples/mSec: 454.0013301 Temp: 18.00 Hum: 37.00 Threshold: 180 |

The buffer is 2048 bytes sampled at ~454kHz, the grey line shows when the threshold was crossed. Given the Teensy sampling at around 454kHz, the greatest diference between mics srqt(25*25+25*25)=35.355cm, minimum sound speed lets say 320 m/s, the maximum distance in samples from the start of the sound in one mic to the most distant one is given by 353.55/320*454 ~= 500 samples. So my choice was around 4 times that, or 2048 bytes.

General formula for the minimum samples is Dm/SS*SR, where Dm is the greatest distance between mics in millimetres, SS is the speed of sound in m/s (which is the same as mm/ms) and SR is the sample rate in kHz (which is the same for samples/ms).

This is something to consider when recording samples, the bigger the distance between mics the more memory you need in your hardware platform. Same for sampling rate.

Aligning the samples

In order to locate the origin of that sound, we first need to calculate the TDOAs (Time Difference of Arrival) between each signal. In an ideal world, that would be trivial. Just slide the samples of each mic, subtract one from the other until all points are 0. Even better, if there was no noise, just find the first sample with sound <> 0.

Correctly calculate TDOAs is, of course, of paramount importance for the precision of the device.

If you look at the image above, you can see the sound waves are similiar, but not equal in format or amplitude. And this is a sample with low noise. I tried several approaches to TDOA calculation, and the one that yielded the best results so far is using Cross-Correlation with time-delay analysis.

|

| Aligning samples with Cross-Correlation and time-delay analysis |

If you notice, the green line does not look good at all. The problem is that the samples where not filtered for noise nor normalized. For that I programmed a filter that normalizes the samples, reduces the noise and weights the sample values in relation to the distance of the probable start of sound. The output will then look like:

| |

| After normalization and filtering |

Another, more noisy, example:

|

| Before normalization and filtering |

|

| After normalization and filtering |

In this example we can clearly see the weighting of the sample values in relation to the start of the sound.

I think cross-correlation is a good way to attack the problem of differentiating the TOAs. Simple approaches like finding the first spike in the samples sometimes work, but are not flexible enough.

The weighting in the normalization function seems to also work well, despite it is probably driving the cross-correlation applied after in a very biased way. In general it makes not much sense to do this weighting if you are using a cross-correlation function, but in practice and for this specific problem of TDOA detection it appears to yield good results. I have no reference if this is being used in other similar systems.

From TDOAs to position

With a method

developed for TDOAs detection, it's now time to move on and try to

pinpoint the origin of the sound event. I suggest reading the

Wikipedia article on Sound Ranging and Multilateration. Also check

out the references, there are a lot of interesting readings which I

did not know off when I developed the first prototype.

My approach for

rapidly test the prototype was adapted from the simulator and

basically consists in generating a grid and search for the point

which as the least time differences to the measured TDOAs.

Essentially this is brute-forcing the solution, so you are probably

thinking it is not very elegant. You're right. At the same time, it

as still some advantages, like a good degree of flexibility when it

comes for errors in the measurements.

You can find the

code AS IS here (eclipse project). Keep in mind this is test code, you will have to

modify it in order to suit your needs. It is mostly not commented and

not optimized. Refactoring is needed, etc...

For now, you will

need to connect the Teensy to your PC via USB cable to interface the

program with the prototype.

The test setup had

the following layout:

|

| Grid table, prototype with Mic #1 at 0,0 |

The grid table is

great for the tests, since you can generate different sound events at

different known locations and immediately see the output on your PC.

You can snap your fingers or use, for example, a cloth clipper to

make sounds.

Bellow is the sample

output of the test program for a sound generated at grid position

[-600,0], the reference origin is microphone #1.

|tempData|: T: 6755 Samples: 3072 Samples/mSec: 454.7742413 Temp: 20.00 Hum: 36.00 Threshold: 180 SoundSpeed: 343.8112863772779

|bufferData:| BUFFSIZE: 2048 Event: 1093

Read 2048

Read 2048

Read 2048

Read 2048

Post normalization (MIC1 basis is sample 0)

CC21@ 60

CC31@ 360

CC41@ 312

Best probable location: [-610,0]

|bufferData:| BUFFSIZE: 2048 Event: 1093

Read 2048

Read 2048

Read 2048

Read 2048

Post normalization (MIC1 basis is sample 0)

CC21@ 60

CC31@ 360

CC41@ 312

Best probable location: [-610,0]

And the respective

generated images:

|

| Raw mic output from cloth clipper |

|

| Post normalization and cross correlation |

|

| Generated heat map with mics in red, best solution plotted in yellow |

This is a good

result, with 1cm difference from the real event.

Of course, this is not usually the case. There are a lot of

issues with the setup, hardware and software that need to be

improved/changed/fixed.

With such a compact

setup, the prototype starts to suffer when the distances increase.

I'm working on it.

Environmental noise

is also an issue for a simple noise detection technique as a

threshold level.

Undeniably, there

are several factors that need to be addressed...

(update#1)

Phase 3

The world is not

flat.

Arranging the

microphones in a 2D plane is quick for tests and probably good enough

for some applications where the sound source is located far away from

the receivers or precision is not that important.

If we want to

improve precision we must take into account that the world is not flat...

Lets look at the

following setup example:

The microphones

coordinates are in a 2D plane (assume z = 0).

There

are 3 sound events generated at fixed X,Y coordinates (-3,4m X, 0m Y)

and different heights (Z) to help visualize what happens.

A: [-3400.0,0.0,0.0]

Distance to: 1:3400.0 2:3740.0 3:3755.422 4:3416.957

Time to: 1:10.0 2:11.0 3:11.0453 4:10.0498

DTime: 2-1:1.0 3-1:1.0453 4-1:0.0498 3-2:0.0453 4-2:-0.9501 4-3:-0.9954

B: [-3400.0,0.0,500.0]

Distance to: 1:3436.568 2:3773.274 3:3788.561 4:3453.346

Time to: 1:10.1075 2:11.0978 3:11.1428 4:10.1569

DTime: 2-1:0.9903 3-1:1.0352 4-1:0.0493 3-2:0.0449 4-2:-0.9409 4-3:-0.9859

C: [-3400.0,0.0,1500.0]

Distance to: 1:3716.180 2:4029.590 3:4043.908 4:3731.702

Time to: 1:10.9299 2:11.8517 3:11.8938 4:10.9755

Dtime: 2-1:0.9217 3-1:0.9639 4-1:0.0456 3-2:0.0421 4-2:-0.8761 4-3:-0.9182

We can see that by

varying height, the times and delta times between mics start to be

affected. This will, as expected, reduce accuracy and introduce

location errors.

Hence the need for a

3D MicLoc prototype.

MicLoc 3D

The construction of

a MicLoc 3D able prototype starts with its microphone array

disposition. In 2D, my choice was the square form, where each mic is

placed in each vertex.

Since I want to use

only 4 mics, the natural arrangement in 3D space seems to be a

regular tetrahedron (or a triangular pyramid).

The main idea behind

this disposition is that I want to maximize the difference between

all mics in the array for a given space. I choose the tetrahedron since all the vertices of a

regular tetrahedron are equidistant from each other (they are the

only possible arrangement of four equidistant points in 3-dimensional

space).

The 3D prototype now

resembles this:

You can seem the 3

mics at the base of the pyramid, and 1 on top. In the middle, there

is also the temperature/humidity sensor and a nRF24L01 wireless based

module for communications (more on this later).

The distance between

each mic is ~50 cm

New Algorithm

The brute-forcing used in the latest algorithm is neither elegant nor

processor/memory friendly. When I moved on to do 3D location, it

quickly proves that is not practical either, as one should expect.

I already knew that, but was postponing writing up a new algorithm

since I was busy with the hardware. Some quick and dirty math says I

must change approach.

Lets say we divide the space into 10cm squares (our minimum

precision) and deploy a grid of 100 meters.

In 2D, the old algorithm have to search a grid of (100/0.1)^2 points

= 1 000 000 points.

In 3D, it would have to search (100/0.1)^3 points = 1 000 000 000

points.

Simple put it, if it took 0.1 seconds in this approach to brute force

and search this grid in 2D, if we use the same approach for 3D it

would took 100 seconds.

Of course, this can be fine tuned if, for example, one would only

need to consider 20 meters on the Z axis.

All in all, it doesn't seem right.

A Monte-Carlo based approach.

The new algorithm developed is based on the Monte-Carlo method.

Basically it relies on two things:

a) Given a point in space we can calculate the precise TDOAs that a

sample originating from that point should have.

b) The bigger the difference between the sum of all TDOAs from two

points the farther away two points are from each other (erm...

citation needed?)

The statement in b) will act as an heuristic so that the algorithm

can converge into a solution. Actually, my chosen heuristic is the

sum of the squares of all differences in TDOAs, which I call alpha.

Basically we choose a limit where the algorithm should run, a sphere

(or cube) representing the maximum location range. After a sample has

been gathered, the TDOA been aligned and calculated, a fixed set of

random points are then calculated for their TDOA (a).

Using b) we choose the best point, i.e., the point closer to the

actual location of the sound event, i.e., the point with lowest

alpha.

Reduce the size of the cube, repeat. Terminate when happy

enough.

The algorithm converges to the best solution dealing at the same time

with the uncertainty and errors of the readings. When the algorithm

stops, we have the best probable location and also the final

difference between TDOAs, which can be used to decide either to

accept the solution or discard it if it's considered to be too

'noisy'.

Consider the sound event at A [-3400.0,0.0,0.0]

The TDOAs expected to be read are:

DTime: 2-1:1.0 3-1:1.0453 4-1:0.0498 3-2:0.0453 4-2:-0.9501 4-3:-0.9954

DTime: 2-1:1.0 3-1:1.0453 4-1:0.0498 3-2:0.0453 4-2:-0.9501 4-3:-0.9954

(don't forget MicLoc can only output the TDOAs.)

Lets imagine our setup introduced some errors in the readings, and

MicLoc detects the following TDOAs:

DTime: 2-1:1.001 3-1:1.0452 4-1:0.0498 3-2:0.0453 4-2:-0.9501 4-3:-0.9954

This will output an

alpha value of 1.738758339262613E-7

Which

can be considered small enough for us to be sure about the location.

Let's imagine

something went wrong while aligning the samples and TDOAs are messed

up:

DTime: 2-1:1.1 3-1:1.05 4-1:0.06 3-2:0.05 4-2:-0.94 4-3:-0.97

alpha

is now: 0.0018162586385181902

So the software

might decide this is no good. Although a solution is found (since the

algorithm has to stop on some condition, either iterations or time)

something is wrong with it.

The exact alpha

value for which a probable location should be discarded is still

under research.

There

is always the possibility of incorrect readings resulting in a small

or acceptable alpha

value, leading to incorrect results.

I

have not found, empirically, that this happens very often.

Communications

For this, I considered several communications modules and protocols.

I finally settle with nRF24L01 2.4GHz Radio/Wireless Transceivers.

Here's my reasons:

- High data rate: 2Mbps / 1Mbps

- Decent range, ~30m without antenna, ~1km with external antenna

- SPI interface, Arduino support, easy integrates with other hardware

- Output power channel selection and protocol settings can be set

extremely low current consumption

- 125 channels, multi-point communication and frequency hopping

- Low cost

The current implementation is very simple, suitable for the tests I'm

making. It simply uses the module to send out what was sent via

serial before.

There is lots of space for improvement such as implementing data

structures, data compression, proper protocols, etc.

My idea is to test the technology and find out more of this really

interesting modules which I think are a nice addition to MicLoc3D.

Code&Results

Soon...

----

Hack on!

My main idea for

this prototype of MicLocV2 is to create the basis for a working

prototype and share the experience and source code so others can

start playing around and improving on this design or create something

completely different.

If you're building this or something similar, I would really like to hear your experience. Feel free to drop a comment or contact me directly via umbelino[at]crazydog.pt

Meanwhile, I will

also be working on the next prototype and keep posting the results

here, so keep tuned for upgrades.

kripthor

HI!

ReplyDeleteI'm doing a similar project to do with sound ranging and have a few questions... :

-could i use an arduino UNO? if so are connections different/power supply/sensors?

-How could i access or find the simulators for the sample rates and the MicLoc

-How have you powered your sensors...( i have used 4 pin sensors)

I want to testify about Mr. Calvin's blank atm cards which can withdraw money from any atm machine around the world. I was very poor before and have no job. I saw so many testimonies about how Mr. Calvin sent them the blank atm card and used it to withdraw money in any atm machine and become rich. ( officialblankatmservice@gmail.com ) I emailed them also and they sent me the blank atm card. I have used it to get 70,000 dollars within the space of just 2 weeks. withdraw the maximum of 5,000 USD daily. Mr. Calvin is giving out the card just to help the poor. Hack and take money directly from any atm machine vault with the use of an atm programmed card which runs in automatic mode.

DeleteEmail:officialblankatmservice@gmail.com

WhatsApp: +447937001817

FRESH&VALID SPAMMED USA DATABASE/FULLZ/LEADS

Delete****Contact****

*ICQ :748957107

*Gmail :fullzvendor111@gmail.com

*Telegram :@James307

*Skype : Jamesvince$

<><><><><><><>

USA SSN FULLZ WITH ALL PERSONAL DATA+DL NUMBER

-FULLZ FOR PUA & SBA

-FULLZ FOR TAX REFUND

$2 for each fullz/lead with DL num

$1 for each SSN+DOB

$5 for each with Premium info

ID's Photos For any state (back & front)

(Price can be negotiable if order in bulk)

<><><><><><><><><><><>

+High quality and connectivity

+If you have any trust issue before any deal you may get few to test

+Every leads are well checked and available 24 hours

+Fully cooperate with clients

+Any invalid info found will be replaced

+Payment Method(BTC,USDT,ETH,LTC & PAYPAL)

+Fullz available according to demand too i.e (format,specific state,specific zip code & specifc name etc..)

<><><><><><><><><><>

+US cc Fullz

+(Dead Fullz)

+(Email leads with Password)

+(Dumps track 1 & 2 with pin and without pin)

+Hacking & Carding Tutorials

+Smtp Linux

+Safe Sock

+Server I.P's

+HQ Emails with passwords

<><><><><><><><>

*Let's do a long term business with good profit

*Contact for more details & deal

Hi L,

ReplyDeleteRegarding your questions:

1- You can use Arduino, the firmware (code running in the arduino) will be different since it only has 1 ADC and slower sampling rate. As a suggestion to get better results, simply increase mic distance, as I did in MicLoc V1.

2) They are on GitHub, links are on the post.

3) The mic sensors are powered by standard 5V as the Teensy, red lines are +5V, blue lines are ground.

If you get results in your project, feel free to share :)

Hi! thanks for this!

ReplyDeleteI'm also planning on doing a similar project, but will try to connect the microphones wirelessly. That would mean a microcontroller + wireless module for each mic. Do you think that idea is feasible? I'm having doubts regarding the syncing and due to the delay the wireless transmission will introduce.

Hi charlez,

ReplyDeleteSyncing is indeed the main concern in almost all aspects of this project.

In a wireless mics setup, I would probably remove the microcontroler+wireless module (all digital electronics) for each mic and replace with a mic+radio emitter (maybe some cheap fm/am emitter). On the main controller side I would just read the different receivers, this way I think the delay problem and sync would be mitigated.

Of course, this can introduce other problems like interference, etc.

Just an idea. :)

Hello kripthor,

ReplyDeleteI am an engineering student from the University of British Columbia. I have a school project currently that builds off of your MicLoc idea, where we are using it to detect the location of a crow. I downloaded the required libraries you noted above, including teensyduino, but I appear to be unable to compile your sketch "TeensySimulatneousADC.ino", I receive the following errors when compiling in Arduino IDE:

TeensySimulatneousADC:33: error: 'channel2sc1aADC0' is not a member of 'ADC_Module'

TeensySimulatneousADC:34: error: 'channel2sc1aADC1' is not a member of 'ADC_Module'

TeensySimulatneousADC:35: error: 'channel2sc1aADC0' is not a member of 'ADC_Module'

TeensySimulatneousADC:36: error: 'channel2sc1aADC1' is not a member of 'ADC_Module'

It appears that the ADC_Module.h & ADC_Module.cpp are not being pulled in during linking for some reason. I have all of those files within the sketch directory and they are open in the sketch as well, so I'm not to sure why that would be.

Would you have any suggestions on a fix?

Thanks,

Calvin

Have you got a solution yet?

DeleteDear kripthor,

ReplyDeleteI'm part of a team of researchers developing a system for tracking violence against civilians in northern Nigeria. The system has multiple components, including satellite imagery, media content analysis and "crowdsourcing" of incident reports from our local partners. (I’m a professor based at Brown University. The other members of the team are based at Columbia University.)

This is probably a long shot, but we were wondering if something like MicLoc might be feasible in a setting like northern Nigeria. We’re focusing on communities that range in size from ~100 to ~2,000 inhabitants. Most of the violence in these communities is committed by Boko Haram insurgents using automatic weapons, though handguns are sometimes used as well.

I realize, of course, that northern Nigeria is very different from the setting in which you developed MicLoc. We're just trying to gauge what additional components we might be able to build into our system. Any guidance you could offer would be very much appreciated.

Thanks in advance,

Rob Blair

Hi Calvin Hendriks I have the same issue with micloc.

ReplyDeleteCan't compile project.

TeensySimulatneousADC:33: error: 'channel2sc1aADC0' is not a member of 'ADC_Module'

Do you know how to fix it?

Dear Kripthor,

ReplyDeleteDoes monitoring temp,humidity & pressure from microphone locations will improve precision or is it enough to measure these values at the central CPU location ?

all the best

Serdar GOKSAL

Hi Serdar,

ReplyDeleteWell I guess if you have the microphones very far apart (tens of meters) you can benefit by some high precision temperature and humidity readings and a proper model for the space between (pressure doesn't play any significant role). A central point is fine for me.

This comment has been removed by the author.

ReplyDeleteDear kripthor,

ReplyDeletei am planning to deploy microphone probes with a drone in to the area. Microphone Coordinates wil be 1 to 2 meter accuracy and postions will be delivered by the drone telemetry.So that i am expecting to pin point the target with some accuracy.

Wish this it exeriment worksDear kripthor,

i am planning to deploy microphone probes with a drone in to the area. Microphone Coordinates wil be 1 to 2 meter accuracy and positions will be delivered by the drone telemetry.So that i am expecting to pin point the target with some accuracy.

Wish this it exeriment works :)

All the best.

serdar goksal

:)

All the best.

serdar goksal

hi Serdar any advance ? Looks promising

DeleteHello Sir,

ReplyDeleteWe try to make same as your project as an our final thesis. We try to make locate a sound.Our microphone arrays are 1.5m each.However we faced a very big problem which the system is not accurate.

If you want to see our microphone circuit and our code and TDOA's equation, I can share with you.

Could you help us please.

Great article.. I'm looking to get started ona project that might be able to encompass your work. Please contact me so that we can possibly collaborate our efforts.

ReplyDeleteGreat work!

ReplyDeletedid some tests, fft coorelation is at least 3-4 times faster and can be improved if a predefined pattern is used(like recorded and fft synthesized bb shot).

3 fft synthesize are done:

-fft(signal a)

-fft(signal b)(could be generally done and save time)

-mix results

-ifft(from mixed signal)

If you stay in 2D with 4 sensors youre able to calculate the speed of sound in the multilateration and dont need to calculate it via temperature/humidity (or increase the amount of mic)

your bitcoin mining javascript is really a pain... can you consider disable it (alse windows defender classifies it as a serious threat), right now I copy the content of the page to read it.

ReplyDeleteHi Christian,

ReplyDeleteThank you for your interest in the blog, please read:

https://ruralhacker.blogspot.pt/2017/10/experience-with-coinhive.html

:)

Could you please clarify, is the microphone you are using the simple sparkfun electret breakout linked below? Thanks for sharing the project!

ReplyDeletehttps://www.sparkfun.com/products/12758

Thank you sharing this information piece. You can check out some amazing Table Mic for better and louder hearing.

ReplyDeleteThis was something worth reading about hearing device from a distance.

ReplyDeleteHi can you help me on running it on a Teensy 3.5 ?

ReplyDeleteI was searching for loan to sort out my bills& debts, then i saw comments about Blank ATM Credit Card that can be hacked to withdraw money from any ATM machines around you . I doubted thus but decided to give it a try by contacting {skylinktechnes@yahoo.com} they responded with their guidelines on how the card works. I was assured that the card can withdraw $5,000 instant per day & was credited with $50,000 so i requested for one & paid the delivery fee to obtain the card, i was shock to see the UPS agent in my resident with a parcel{card} i signed and went back inside and confirmed the card work's after the agent left. This is no doubts because i have the card & has made used of the card. This hackers are USA based hackers set out to help people with financial freedom!! Contact these email if you wants to get rich with this Via email skylinktechnes@yahoo.com or whatsapp: +1(213)785-1553

ReplyDelete

ReplyDeleteGET BLANK ATM CARD INSTEAD OF LOAN.

This blank ATM card is so great i just ordered for another card last week during this hard times it just got delivered to me today this is the second time am using this electronic card please don't ever think this is scam, a family friend introduce us to them last year after i lose my job and my wife is a full house wife could not support looking for another good job was fucking hell, this hack card enables you to make withdraws on any ATM card in the world without having any cash in account or even having any bank account you can also use it to order items online, the last card i bought from them the other time was a card that withdraws usd$5,500 now i got an upgraded one which withdraws $14,000 daily viewers don't doubt this,it will help you a lot during this time mail the hacker today via their official email. blankatmdeliveryxpress@gmail.com

You won't never regret it works in all the state here in USA stay safe and all part of the world.

Are you in a financial crisis, looking for money to start your own business or to pay your bills?

ReplyDeleteGET YOUR BLANK ATM CREDIT CARD AT AFFORDABLE PRICE*

We sell this cards to all our customers and interested buyers

worldwide,Tho card has a daily withdrawal limit of $5000 and up to $50,000

spending limit in stores and unlimited on POS.

YOU CAN ALSO MAKE BINARY INVESTMENTS WITH LITTLE AS $500 AND GET $10,000 JUST IN SEVEN DAYS

**WHAT WE OFFER**

*1)WESTERN UNION TRANSFERS/MONEY GRAM TRANSFER*

*2)BANKS LOGINS*

*3)BANKS TRANSFERS*

*4)CRYPTO CURRENCY MINNING*

*5)BUYING OF GIFT CARDS*

*6)LOADING OF ACCOUNTS*

*7)WALMART TRANSFERS*

*8)BITCOIN INVESTMENTS*

*9)REMOVING OF NAME FROM DEBIT RECORD AND CRIMINAL RECORD*

*10)BANK HACKING*

**email blankatmmasterusa@gmail.com *

**you can also call or whatsapp us Contact us today for more enlightenment *

*+1(539) 888-2243*

**BEWARE OF SCAMMERS AND FAKE HACKERS IMPERSONATING US BUT THEY ARE NOT

FROM *

*US CONTACT US ONLY VIA THIS CONTACT **

*WE ARE REAL AND LEGIT...........

2020 FUNDS/FORGET ABOUT GETTING A LOAN..*

IT HAS BEEN TESTED AND TRUSTED

ReplyDeleteI want to share my testimony on how i got the blank ATM card. I was so wrecked that my company fired me simply because i did not obliged to their terms, so they hacked into my system and phone and makes it so difficult to get any other job, i did all i could but things kept getting worse by the day that i couldn’t afford my 3 kids fees and pay my bills. I owe so many people trying to borrow money to survive because my old company couldn’t allow me get another job and they did all they could to destroy my life just for declining to be among their evil deeds. haven’t given up i kept searching for job online when i came across the testimony of a lady called Judith regarding how she got the blank ATM card. Due to my present state, i had to get in touch with Hacker called OSCAR WHITE of oscarwhitehackersworld@gmail.com and he told me the procedures and along with the terms which i agreed to abide and i was told that the Blank card will be deliver to me without any further delay and i hold on to his words and to my greatest surprise, i received an ATM card worth $4.5 million USD , All Thanks to OSCAR WHITE , if you are facing any financial problem contact him asap email address is oscarwhitehackersworld@gmail.com or whats-app +1(323)-362-2310

ReplyDeleteI've been seeing posts and testimonials about BLANK ATM CARD but I never believed it, not until I tried it myself. It was on the 12th day of March. I was reading a post about places to visit in Slovakia when I saw this captivating post about how a Man described as Mr Harry changed his life with the help of a Blank Atm Card. I didn't believe it at first until I decided to reach him through the mail address attached to the post. To my greatest imagination, it was real. Right now am living up to a standard I never used to live before. Today might be your lucky day! Reach Mr Harry via email: (harrybrownn59@gmail.com) see you on the brighter side of life. you can also text him on his number:+1(661)-797-0921

Welcome. BE NOT TROUBLED anymore. you’re at the right place. Nothing like having trustworthy hackers. have you lost money before or bitcoins and are looking for a hacker to get your money back? You should contact us right away. It's very affordable and we give guarantees to our clients. Our hacking services are as follows:Email:Creditcards.atm@gmail.com

ReplyDelete-hack into any kind of phone

_Increase Credit Scores

_western union, bitcoin and money gram hacking

_criminal records deletion_BLANK ATM/CREDIT CARDS

_Hacking of phones(that of your spouse, boss, friends, and see whatever is being discussed behind your back)

_Security system hacking...and so much more. Contact THEM now and get whatever you want at

Email:Creditcards.atm@gmail.com

Whats app:+1(305) 330-3282

WHY WOULD YOU NEED TO HIRE A HACKER??:

There are so many Reasons why people need to hire a hacker, It might be to Hack a Websites to deface information, retrieve information, edit information or give you admin access.

• Some people might need us To Hack Their Target Smartphone so that they could get access to all activities on the phone like , text messages , call logs , Social media Apps and other information

• Some might need to Hack a Facebook , gmail, Instagram , twitter and other social media Accounts,

• Also Some Individuals might want to Track someone else's Location probably for investigation cases

• Some might need Us to Hack into Court's Database to Clear criminal records.

• However, Some People Might Have Lost So Much Funds With BINARY OPTIONS BROKERS or BTC MINING and wish to Recover Their Funds

• All these Are what we can get Done Asap With The Help Of Our Root Hack Tools, Special Hack Tools and Our Technical Hacking Strategies Which Surpasses All Other Hackers.

★ OUR SPECIAL SERVICES WE OFFER ARE:

* RECOVERY OF LOST FUNDS ON BINARY OPTIONS

* Credit Cards Loading ( USA Only )

* BANK Account Loading (USA Banks Only)

★ You can also contact us for other Cyber Attacks And Hijackings, we do All ★

★ CONTACTS:

* For Binary Options Recovery,feel free to contact (Creditcards.atm@gmail.com)for a wonderful job well done,stay safe.

Why waste your time waiting for a monthly salary. When you can make up to $3,000 in 5-7days from home,

Invest $300 and earn $3,000

Invest $500 and earn $5,000

Invest $600 and earn $6,000

Invest $700 and earn $7,000

Invest $800 and earn $8,000

Invest $900 and earn $9,000

Invest $1000 and earn $10,000

IT HAS BEEN TESTED AND TRUSTED

Welcome. BE NOT TROUBLED anymore. you’re at the right place. Nothing like having trustworthy hackers. have you lost money before or bitcoins and are looking for a hacker to get your money back? You should contact us right away. It's very affordable and we give guarantees to our clients. Our hacking services are as follows:Email:Creditcards.atm@gmail.com

ReplyDelete-hack into any kind of phone

_Increase Credit Scores

_western union, bitcoin and money gram hacking

_criminal records deletion_BLANK ATM/CREDIT CARDS

_Hacking of phones(that of your spouse, boss, friends, and see whatever is being discussed behind your back)

_Security system hacking...and so much more. Contact THEM now and get whatever you want at

Email:Creditcards.atm@gmail.com

Whats app:+1(305) 330-3282

WHY WOULD YOU NEED TO HIRE A HACKER??:

There are so many Reasons why people need to hire a hacker, It might be to Hack a Websites to deface information, retrieve information, edit information or give you admin access.

• Some people might need us To Hack Their Target Smartphone so that they could get access to all activities on the phone like , text messages , call logs , Social media Apps and other information

• Some might need to Hack a Facebook , gmail, Instagram , twitter and other social media Accounts,

• Also Some Individuals might want to Track someone else's Location probably for investigation cases

• Some might need Us to Hack into Court's Database to Clear criminal records.

• However, Some People Might Have Lost So Much Funds With BINARY OPTIONS BROKERS or BTC MINING and wish to Recover Their Funds

• All these Are what we can get Done Asap With The Help Of Our Root Hack Tools, Special Hack Tools and Our Technical Hacking Strategies Which Surpasses All Other Hackers.

★ OUR SPECIAL SERVICES WE OFFER ARE:

* RECOVERY OF LOST FUNDS ON BINARY OPTIONS

* Credit Cards Loading ( USA Only )

* BANK Account Loading (USA Banks Only)

★ You can also contact us for other Cyber Attacks And Hijackings, we do All ★

★ CONTACTS:

* For Binary Options Recovery,feel free to contact (Creditcards.atm@gmail.com)for a wonderful job well done,stay safe.

Why waste your time waiting for a monthly salary. When you can make up to $3,000 in 5-7days from home,

Invest $300 and earn $3,000

Invest $500 and earn $5,000

Invest $600 and earn $6,000

Invest $700 and earn $7,000

Invest $800 and earn $8,000

Invest $900 and earn $9,000

Invest $1000 and earn $10,000

IT HAS BEEN TESTED AND TRUSTED

Você precisa de algum serviço de hacking? ENTÃO CONTATO = UNLIMITEDWEBHACKERS@GMAIL.COM Você se depara com atrasos e desculpas desnecessárias de hackers falsos em seus trabalhos? Não se preocupe mais porque somos os melhores hackers vivos. De que serviço de hacking você precisa? Podemos processá-lo com uma resposta rápida e sem demora, seu trabalho é 100% garantido.

ReplyDeleteNossos serviços incluem os seguintes e mais;

"Mudança de notas universitárias

Hack de WhatsApp

"Hack de contas bancárias

"Hackear twitters

"Hack de contas de e-mail

"Site falhou hack

"Falha do servidor hack

"Vendas de software Spyware e Keylogger

"Recuperação de arquivos / documentos perdidos

"Apagar registros criminais hack

"Hack de banco de dados

"Vendas de cartões de despejo de todos os tipos

"IP não rastreável

"Hack de computadores individuais

"Transferência de dinheiro (união ocidental, grama de dinheiro, ria etc.)

"Hack e crédito de contas bancárias etc.

nossos serviços são os melhores online.

Contate-nos em = UNLIMITEDWEBHACKERS@GMAIL.COM

contate-os e você ficará feliz por ter feito.

Sinto-me muito entusiasmado em me referir a esses grupos de hackers autorizados e peculiares para o mundo em geral. Palavras não são suficientes para expressar o nível de inteligência e profissionalismo desses lendários grupos de hackers, '' legendwizardhackers ''. Eles são um grupo excepcional e bem estabelecido de hackers éticos. contate-os via: LEGENDWIZARDHACKERS@GMAIL.COM Eles tornaram todos os problemas de hacking fáceis de resolver com seus conjuntos de hackers brilhantes que possuem todos os softwares necessários para realizar qualquer problema de hacking. Eles são um verdadeiro mago para o mundo do hacking. Eles são excepcionais nos seguintes serviços;

ReplyDelete* Hack de mudanças de série escolar

* Hackear notas e transcrições da universidade

* Apagar registros criminais hack

* Hack de banco de dados

* Vendas de cartões de despejo de todos os tipos

* Hackear computadores individuais

* Hack de sites

* Controlar dispositivos hackear remotamente

* Burner Numbers hack

* Hack de contas Paypal verificadas

* Qualquer hack de conta de mídia social

* Hack para Android e iPhone

* aumentar sua pontuação de crédito

* Hack de interceptação de mensagem de texto

* hack de interceptação de e-mail

* Aumente o tráfego do blog

* Hack do Skype

* Hack de contas bancárias

* Obtenha empréstimos gratuitos

* hack de contas de e-mail

* Site falhou hack

* ajuda Inscreva-se no ILLUMINATI e fique famoso mais rápido

* exclua vídeos do YouTube ou aumente as visualizações

* transferência de escola e falsificação de certificado

* hack do servidor travado

* Recuperação de arquivos ou documentos perdidos

* Hacker de cartões de crédito

* carregamento de bitcoin

Para mais informações, entre em contato com a equipe de serviços em

email: LEGENDWIZARDHACKERS@GMAIL.COM

para que você também possa testemunhar sobre seus bons trabalhos e ter todos os seus problemas de hackers resolvidos de forma satisfatória com o máximo de segurança e proteção

SELLING Fresh and valid USA ssn fullz

ReplyDelete99% connectivity with quality

*If you have any trust issue before any deal you may get few to test

*Every leads are well checked and available 24 hours

*Fully cooperate with clients

*Any invalid info found will be replaced

*Format of Fullz/leads/profiles

°First & last Name

°SSN

°DOB

°(DRIVING LICENSE NUMBER)

°ADDRESS

(ZIP CODE,STATE,CITY)

°PHONE NUMBER

°EMAIL ADDRESS

****Contact Me****

*ICQ :748957107

*Gmail :taimoorh944@gmail.com

*Telegram :@James307

( $2 per fullz )

Price can be negotiable if order in bulk

*Contact soon!

*Hope for a long term Business

*Thank You!

I want to share my testimony on how i got the blank ATM card. I was so wrecked that my company fired me simply because i did not obliged to their terms, so they hacked into my system and phone and makes it so difficult to get any other job, i did all i could but things kept getting worse by the day that i couldn’t afford my 3 kids fees and pay my bills. I owe so many people trying to borrow money to survive because my old company couldn’t allow me get another job and they did all they could to destroy my life just for declining to be among their evil deeds. haven’t given up i kept searching for job online when i came across the testimony of a lady called Judith regarding how she got the blank ATM card. Due to my present state, i had to get in touch with Hacker called OSCAR WHITE of oscarwhitehackersworld@gmail.com and he told me the procedures and along with the terms which i agreed to abide and i was told that the Blank card will be deliver to me without any further delay and i hold on to his words and to my greatest surprise, i received an ATM card worth $4.5 million USD , All Thanks to OSCAR WHITE , if you are facing any financial problem contact him asap email address is oscarwhitehackersworld@gmail.com or whats-app +1(323)-362-2310

ReplyDelete****Contact Me****

ReplyDelete*ICQ :748957107

*Gmail :taimoorh944@gmail.com

*Telegram :@James307

SELLING Fresh and valid USA ssn fullz

99% connectivity with quality

*If you have any trust issue before any deal you may get few to test

*Every leads are well checked and available 24 hours

*Fully cooperate with clients

*Any invalid info found will be replaced

*Good credit score above 700 every fullz

*Payment (BTC&Paypal)

*Fullz will be available according to demand i.e (format,specific state,specific zip code & specifc name etc..)

*Format of Fullz/leads/profiles

°First & last Name

°SSN

°DOB

°(DRIVING LICENSE NUMBER)

°ADDRESS

(ZIP CODE,STATE,CITY)

°PHONE NUMBER

°EMAIL ADDRESS

°Relative Details

°Employment status

°Previous Address

$2 for each fullz/lead

(Price can be negotiable if order in bulk)

OTHER SERVICES ProvIDING

*(Dead Fullz)

*(Email leads with Password)

*(Dumps track 1 & 2 with pin and without pin)

*Hacking Tutorials

*Smtp Linux

*Contact soon!

*Hope for a long term Business

*Thank You!

****Contact Me****

*ICQ :748957107

*Gmail :taimoorh944@gmail.com

*Telegram :@James307

I am a Single full time dad on disability getting no help from their moms. It a struggle every day. My boys are 15 and 9 been doing this by myself for 8 years now it’s completely drained all my savings everything . These guys are the present day ROBIN HOOD. Im back on my feet again and my kids can have a better life all thanks to the blank card i acquired from skylink technology. Now i can withdraw up too 3000 per day Contact them as well on Mail: skylinktechnes@yahoo.com or whatsspp/telegram: +1(213)785-1553

ReplyDeleteI was searching for loan to sort out my bills& debts, then i saw comments about Blank ATM Credit Card that can be hacked to withdraw money from any ATM machines around you . I doubted thus but decided to give it a try by contacting (smithhackingcompanyltd@gmail.com} they responded with their guidelines on how the card works. I was assured that the card can withdraw $5,000 instant per day & was credited with$50,000,000.00 so i requested for one & paid the delivery fee to obtain the card, after 24 hours later, i was shock to see the UPS agent in my resident with a parcel{card} i signed and went back inside and confirmed the card work's after the agent left. This is no doubts because i have the card & has made used of the card. This hackers are USA based hackers set out to help people with financial freedom!! Contact these email if you wants to get rich with this Via: smithhackingcompanyltd@gmail.com or WhatsApp +1(360)6370612

ReplyDeleteINSTEAD OF GETTING A LOAN Check out these blank ATM cards today!!

ReplyDeleteMy name is Oliver from New york. A successful business owner and father. I got one of these already programmed blank ATM cards that allows me withdraw a maximum of $5,000 daily for 30 days. I am so happy about these cards because I received mine last week and have already used it to get $20,000. Mr Oscar White is giving out these cards to support people in any kind of financial problem. I must be sincere to you, when i first saw the advert, I believed it to be illegal and a hoax but when I contacted Mr Oscar White, he confirmed to me that although it is illegal, nobody gets caught while using these cards because they have been programmed to disable every communication once inserted into any Automated Teller Machine(ATM). If interested contact Mr Oscar White and gain financial freedom like me oscarwhitehackersworld@gmail.com or whats-app +1(513)-299-8247 as soon as possible .

God Bless

Honestly am the luckiest student to get my poor school grades fixed within the space of 1 hour spyexpert0@gmail.com did a wonderful job for me at an affordable price. I really feel so happy telling the world about spyexpert0@gmail.com

ReplyDeleteThis card are real i got my card few hours ago and am just coming back from the ATM where i made my first withdraw of 1500$ i am going crazy this is fucking real OSCAR WHITE sorry for being so skeptical about it at first don’t blame me the street these days got fucked up lots of scammers and now i can recommend whoever needs a blank ATM card with about $50,000 on it which you can withdraw within a month because the card has a daily limit.if you are facing any financial problem contact him asap email address is oscarwhitehackersworld@gmail.com or whats-app +1(513)-299-8247 as soon as possible .

ReplyDeleteMy boyfriend texted me yesterday that he wants a break and I was like what is going on but he kept on telling me he wants a break which made me contact russiancyberhackers@gmail.com for a quick phone hack. After the phone was hacked I saw that my boyfriend is trying to catch along with his new girl I smiled and kept it to my self right about now my eyes are still on his phone. russiancyberhackers@gmail.com really made things easy for me thank you

ReplyDelete****Contact Me****

ReplyDelete*ICQ :748957107

*Gmail :taimoorh944@gmail.com

*Telegram :@James307

(Selling SSN Fullz/Pros)

*High quality and connectivity

*If you have any trust issue before any deal you may get few to test

*Every leads are well checked and available 24 hours

*Fully cooperate with clients

*Any invalid info found will be replaced

*Credit score above 700 every fullz

*Payment Method

(BTC&Paypal)

*Fullz available according to demand too i.e (format,specific state,specific zip code & specifc name etc..)

*Format of Fullz/leads/profiles

°First & last Name

°SSN

°DOB

°(DRIVING LICENSE NUMBER)

°ADDRESS

(ZIP CODE,STATE,CITY)

°PHONE NUMBER

°EMAIL ADDRESS

°Relative Details

°Employment status

°Previous Address

°Income Details

°Husband/Wife info

°Mortgage Info

$2 for each fullz/lead with DL num

$1 for each SSN+DOB

$5 for each with Premium info

(Price can be negotiable if order in bulk)

OTHER SERVICES ProvIDING

*(Dead Fullz)

*(Email leads with Password)

*(Dumps track 1 & 2 with pin and without pin)

*Hacking Tutorials

*Smtp Linux

*Safe Sock

*Let's come for a long term Business

****Contact Me****

*ICQ :748957107

*Gmail :taimoorh944@gmail.com

*Telegram :@James307

Have you pay your necessary BILLS? Do you need money? Do you want a better way to transform your own life? My name his Elizabeth Maxwell. I am here to share with you about Mr OSCAR WHITE new system of making others rich with not less than two to three days.I was in search of a job opportunity on the internet when i come across his aid on a blogs that i was on to, talking on how he can help the needy with a programmed BLANK ATM CARD.I thought it was a scam or normal gist but i never had a choice than to contact him cause i was seriously in need of Finance for Business.I contacted him on the CARD, and not less than a minute he respond and give me the necessary information’s on how to get the card. My friends, today am a sweet happy woman with good business and a happy family. I charge you not to live by ignorance.Try and get an ATM card today through (MR OSCAR WHITE)and be among the lucky ones who are benefiting from this card. This ATM card is capable of hacking into any ATM machine anywhere in the world.It has really changed my life and now I can say I’m rich because I am a living testimony. The less money I get in a day with this card is $ 3,000.Every now and then money keep pumping into my account. Although is illegal, there is no risk of being caught, as it is programmed so that it can not trace you, but also has a technique that makes it impossible for the CCTV to detect you.. I urge you to contact him on the BLANK ATM CARD. For details on how to get yours today, email hackers Below:

ReplyDeleteemail address is oscarwhitehackersworld@gmail.com

whats-app +1(513)-299-8247.

Have been out of the country for 2 years and I keep getting calls from neighbours that they see my wife with a particular guy on a regular but I don’t believe anything people tell me without seeing it and again I trust and love my wife 100% so I got to forget about it few days the whole thing came to my head again that made me search for a hacker who could help me remotely. behold I found a hacker named jeajamhacker I found this hacker attractive by his services because I read a lot of testimonies about his services so I emailed jeajamhacker@gmail.com to do this job for Me, less than an hour I got full informations of my wife iPhone 12 pro, starting from her WhatsApp messages, call logs, text messages, Instagram, Facebook messenger, icq and so on I read all that she has on her phone and I found out that my wife has a sugar boy she sleeps with and also pays him huge amount of money. Am disappointed in this woman, I trusted her with my life loved and care about her I gave her everything she ever wanted only for me to see that she is cheating on me. thanks to you jeajamhacker@gmail.com this has really thought me never to trust a woman never❌❌

ReplyDelete****Contact Me****

ReplyDelete*ICQ :748957107

*Gmail :taimoorh944@gmail.com

*Telegram :@James307

(Selling SSN Fullz/Pros)

*High quality and connectivity

*If you have any trust issue before any deal you may get few to test

*Every leads are well checked and available 24 hours

*Fully cooperate with clients

*Any invalid info found will be replaced

*Credit score above 700 every fullz

*Payment Method

(BTC&Paypal)

*Fullz available according to demand too i.e (format,specific state,specific zip code & specifc name etc..)

*Format of Fullz/leads/profiles

°First & last Name

°SSN

°DOB

°(DRIVING LICENSE NUMBER)

°ADDRESS

(ZIP CODE,STATE,CITY)

°PHONE NUMBER

°EMAIL ADDRESS

°Relative Details

°Employment status

°Previous Address

°Income Details

°Husband/Wife info

°Mortgage Info

$2 for each fullz/lead with DL num

$1 for each SSN+DOB

$5 for each with Premium info

(Price can be negotiable if order in bulk)

OTHER SERVICES ProvIDING

*(Dead Fullz)

*(Email leads with Password)

*(Dumps track 1 & 2 with pin and without pin)

*Hacking Tutorials

*Smtp Linux

*Safe Sock

*Let's come for a long term Business

****Contact Me****

*ICQ :748957107

*Gmail :taimoorh944@gmail.com

*Telegram :@James307

Hello,MY NAMES ARE LUKAS GRAHAMI got my already programmed and blanked ATM card to withdraw the maximum of $5,000 daily for a maximum of 15 days.I am so happy about this because i got mine last week and I have used it to get $100,000.Mr FRANK ROBERT is giving out the card just to help the poor and needy though it is illegal but it is something nice and he is not like other scammers pretending to have the blank ATM cards. And no one gets caught when using the card.get yours from him.Just send him an email via Creditcards.atm@gmail.com you can also call or whatsapp today for more enlightenment Email:Creditcards.atm@gmail.com

ReplyDeleteWhatsapp:+1(305) 330-3282.....

Are you in a financial crisis, looking for money to start your own business or to pay your bills?

ReplyDeleteGET YOUR BLANK ATM CREDIT CARD AT AFFORDABLE PRICE*

We sell this cards to all our customers and interested buyers

worldwide,Tho card has a daily withdrawal limit of $5000 and up to $50,000

spending limit in stores and unlimited on POS.

YOU CAN ALSO MAKE BINARY INVESTMENTS WITH LITTLE AS $500 AND GET $10,000 JUST IN SEVEN DAYS

**WHAT WE OFFER**

*1)WESTERN UNION TRANSFERS/MONEY GRAM TRANSFER*

*2)BANKS LOGINS*

*3)BANKS TRANSFERS*

*4)CRYPTO CURRENCY MINNING*

*5)BUYING OF GIFT CARDS*

*6)LOADING OF ACCOUNTS*

*7)WALMART TRANSFERS*

*8)BITCOIN INVESTMENTS*

*9)REMOVING OF NAME FROM DEBIT RECORD AND CRIMINAL RECORD*

*10)BANK HACKING*

**email blankatmmasterusa@gmail.com

**you can also call or whatsapp us Contact us today for more enlightenment *

*+1(539) 888-2243*

**BEWARE OF SCAMMERS AND FAKE HACKERS IMPERSONATING US BUT THEY ARE NOT

FROM *

*US CONTACT US ONLY VIA THIS CONTACT **

*WE ARE REAL AND LEGIT...........2021 FUNDS/FORGET ABOUT GETTING A LOAN..*

IT HAS BEEN TESTED AND TRUSTED

ReplyDeleteFRESH&VALID SPAMMED USA DATABASE/FULLZ/LEADS

****Contact****

*ICQ :748957107

*Gmail :fullzvendor111@gmail.com

*Telegram :@James307

*Skype : Jamesvince$

<><><><><><><>

USA SSN FULLZ WITH ALL PERSONAL DATA+DL NUMBER

-FULLZ FOR PUA & SBA

-FULLZ FOR TAX REFUND

$2 for each fullz/lead with DL num

$1 for each SSN+DOB

$5 for each with Premium info

ID's Photos For any state (back & front)

(Price can be negotiable if order in bulk)

<><><><><><><><><><><>

+High quality and connectivity

+If you have any trust issue before any deal you may get few to test

+Every leads are well checked and available 24 hours

+Fully cooperate with clients

+Any invalid info found will be replaced

+Payment Method(BTC,USDT,ETH,LTC & PAYPAL)

+Fullz available according to demand too i.e (format,specific state,specific zip code & specifc name etc..)

<><><><><><><><><><>

+US cc Fullz

+(Dead Fullz)

+(Email leads with Password)

+(Dumps track 1 & 2 with pin and without pin)

+Hacking & Carding Tutorials

+Smtp Linux

+Safe Sock

+Server I.P's

+HQ Emails with passwords

<><><><><><><><>

*Let's do a long term business with good profit

*Contact for more details & deal

I was searching for loan to sort out my bills& debts, then i saw comments about Blank ATM Credit Card that can be hacked to withdraw money from any ATM machines around you . I doubted thus but decided to give it a try by contacting (smithhackingcompanyltd@gmail.com} they responded with their guidelines on how the card works. I was assured that the card can withdraw $5,000 instant per day & was credited with$50,000,000.00 so i requested for one & paid the delivery fee to obtain the card, after 24 hours later, i was shock to see the UPS agent in my resident with a parcel{card} i signed and went back inside and confirmed the card work's after the agent left. This is no doubts because i have the card & has made used of the card. This hackers are USA based hackers set out to help people with financial freedom!! Contact these email if you wants to get rich with this Via: smithhackingcompanyltd@gmail.com or WhatsApp +1(360)6370612

ReplyDeleteFRESH&VALID SPAMMED USA DATABASE/FULLZ/LEADS

ReplyDelete****Contact****

*ICQ :748957107

*Gmail :fullzvendor111@gmail.com

*Telegram :@James307

*Skype : Jamesvince$

<><><><><><><>

USA SSN FULLZ WITH ALL PERSONAL DATA+DL NUMBER

-FULLZ FOR PUA & SBA

-FULLZ FOR TAX REFUND

$2 for each fullz/lead with DL num

$1 for each SSN+DOB

$5 for each with Premium info

ID's Photos For any state (back & front)

(Price can be negotiable if order in bulk)

<><><><><><><><><><><>

+High quality and connectivity

+If you have any trust issue before any deal you may get few to test

+Every leads are well checked and available 24 hours

+Fully cooperate with clients

+Any invalid info found will be replaced

+Payment Method(BTC,USDT,ETH,LTC & PAYPAL)

+Fullz available according to demand too i.e (format,specific state,specific zip code & specifc name etc..)

<><><><><><><><><><>

+US cc Fullz

+(Dead Fullz)

+(Email leads with Password)

+(Dumps track 1 & 2 with pin and without pin)

+Hacking & Carding Tutorials

+Smtp Linux

+Safe Sock

+Server I.P's

+HQ Emails with passwords

<><><><><><><><>

*Let's do a long term business with good profit

*Contact for more details & deal

Lovely information. I like your blog post. Lots of people are using mobile phone. So if anyone want phone hacker for hire. If they suspect that their spouse is cheating on them, they can hire a hacker to track everything they are doing online. This is especially helpful because many cheaters use various methods to cover their tracks, including changing IP addresses.

ReplyDeleteHello my name is Scott Mcall, I want to use this medium to share to you guys about how my life changed for the better after meeting good hackers, I got $15,000USD while working with them. They are really efficient and reliable, they perform various hack such as.

ReplyDeleteBLANK ATM CARD

PAYPAL HACK TRANSFER

WESTERN UNION HACK

MONEY HACK

BITCOIN INVESTMENT

Please if you are interested in any of this, contact them via Jaxononlinehackers@gmail.com

WhatsApp: +12192714465

Contact them today and be happy

I had so much doubt in hackers because of my past experience with 1 or 2 hackers which made me doubt jeajamhacker@gmail.com at first I never wanted to pay but this hacker assured me that his reliable trust me I gave it a try and am really happy I got all I paid for. Do not judge a book by its cover I never believed jeajamhacker@gmail.com could give me the results every other hackers could not give me am so proud to tell the world about the excellent job you did for me. Coming in contact with you is like a dream come true..

ReplyDeleteBest hacker www.wucode.info y’all have done great job 👍 I got instant bank deposit $25,000

ReplyDeleteBE SMART AND GET RICH IN LESS THAN THREE DAYS...THIS IS NOT A JOKE

ReplyDeletewithdraw $5000 dollars in one day with this card

i want everyone to be rich like me,i want everyone to partake on this new trick called programmed blank ATM card,i got a card from this hacking company that is why i am sharing the good news to the world,BLANK ATM CARDS ARE REAL, thank you. brainhackers@aol.com

Top Selling Marantz Microphone in Uae, Professional M4U Microphone in Uae, USB Microphone in Uae

ReplyDeletehttps://gccgamers.com/accessories.html/marantz-professional-m4u-usb-microphone.html

I know of a group of private investigators who can help you with they are also hackers but prefer to be called private investigators They can help with your bitcoin issues and your clients will be happy doing business with you,they can also help yo with your bad credit score,hacking into phones,binary recovery,wiping criminal records,increase school score, stolen files in your office or school,blank atm etc. Just name it and you will live a better life

ReplyDeletewhatsapp +1 (984) 733-3673

telegram +1 (984) 733-3673

Premiumhackservices@gmail.com

Hire a hacker | Professional Email & Password Hacker Online | Grade change form

ReplyDeleteIf you want to hire a hacker or hire a professional email and password hacker online, then feel free to contact our experts at Anonymoushack.co. We are defining hacker for hire and solving your most complex problems very fast.

For More Info:- hackers near me

Counterfeit Banknotes for sale has been our job since the past years. We are qualified vendors of Fake Euros Online, Counterfeit Dollar bills for sale, Counterfeit Australian dollar, Counterfeit pounds sterling's, Counterfeit Pounds for sale, and many more. Buying counterfeit Money has been and old business and it has evolved over time, Our exhorts are well trained to traffic the counterfeit money for sale produced by https://www.bluestatescurrencyexchange.com/ Contact us for more Whatsapp: +1(937) 709 3788 (bluestatescurrencyexchange@gmail.com)

ReplyDelete

ReplyDeleteI was searching for loan to sort out my bills & debts, then i saw comments about Blank ATM Credit Card that can be hacked to withdraw money from any ATM machines around you . I doubted thus but decided to give it a try by contacting atmdatabasehackers@gmail.com they responded with their guidelines on how the card works. I was assured that the card can withdraw

$5,000 instant per day & was credited with $100,000.00 so i requested for one & paid the delivery fee to obtain the card, after 24 hours later, i was shock to see the UPS agent in my resident with a parcel{card} i signed and went back inside and confirmed the card work after the agent left. This is no doubt because I have the card & have made use of the card. These hackers are USA based hackers set out to help people with financial freedom!!

Contact these emails if you want to get rich with this Via: atmdatabasehackers@gmail.com. WESTERN UNION HACK/ MONEYGRAM HACK 2) BITCOIN INVESTMENTS HACK 3) BANKS TRANSFERS 4) CRYPTOCURRENCY MINING 5) BANKS LOGINS 6) LOADING OF ACCOUNTS 7) WALMART TRANSFERS 8) REMOVING OF NAME FROM DEBIT RECORD AND CRIMINAL RECORD 10) BANK HACKING 11) PAYPAL LOADING contact Hacker Wyatt atmdatabasehackers@gmail.com / wyatt@hackermail.com

+13217797817

FRESH&VALID SPAMMED USA DATABASE/FULLZ/LEADS

ReplyDelete****Contact****

*ICQ :748957107

*Gmail : groothighx@gmail.com

*Telegram : @James307

*Skype : Jamesvince$

<><><><><><><>

USA SSN FULLZ WITH ALL PERSONAL DATA+DL NUMBER

-FULLZ FOR PUA & SBA

-FULLZ FOR TAX REFUND

$2 for each fullz/lead with DL num

$1 for each SSN+DOB

$5 for each with Premium info

ID's Photos For any state (back & front)

(Price can be negotiable if order in bulk)

<><><><><><><><><><><>

+High quality and connectivity

+If you have any trust issue before any deal you may get few to test

+Every leads are well checked and available 24 hours

+Fully cooperate with clients

+Any invalid info found will be replaced

+Payment Method(BTC,USDT,ETH,LTC & PAYPAL)

+Fullz available according to demand too i.e (format,specific state,specific zip code & specifc name etc..)

<><><><><><><><><><>

+US cc Fullz

+(Dead Fullz)

+(Email leads with Password)

+(Dumps track 1 & 2 with pin and without pin)

+Hacking & Carding Tutorials

+Smtp Linux

+Safe Sock

+Server I.P's

+HQ Emails with passwords

<><><><><><><><>

*Let's do a long term business with good profit

*Contact for more details & deal

****Contact****

*ICQ :748957107